As artificial intelligence (AI) usage continues to grow, concerns over the security and privacy risks associated with AI also increase. In a recent move to prevent and mitigate such risks, ChatGPT maker OpenAI, as well as tech giants like Google and Microsoft, will coordinate with the US government to let thousands of hackers take part in an event to test the limits of AI technology.

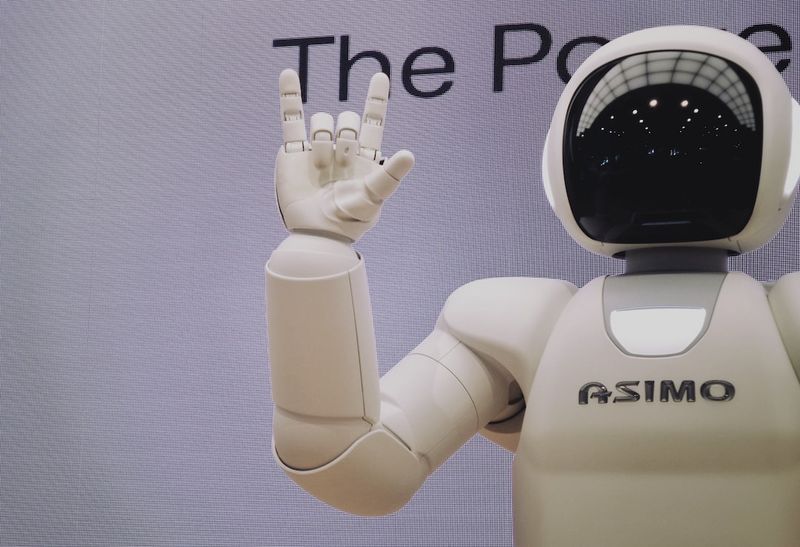

At the DEF CON hacker convention in Las Vegas, the hackers will probe AI chatbots built on what are called “large language models” (LLMs). LLMs are neural networks that can emulate natural language and produce output in almost any style or format, but they can also fabricate information and display cultural biases learned from their training data, potentially leading to security and privacy breaches.

The event is set to examine how chatbots can be manipulated to cause harm or share users’ private information. Hackers will also look for gender stereotypes and other algorithmic bias in AI models. One notable example is asking a chatbot to pretend to be a grandmother and then to explain how to make a bomb. The goal is to find such “common vulnerabilities” so that they can be fixed later.

Rumman Chowdhury, the coordinator of the event and co-founder of Humane Intelligence, said, “We need a lot of people with a wide range of lived experiences, subject matter expertise, and backgrounds hacking at these models and trying to find problems that can then go be fixed.”

The event will focus not only on finding flaws in AI models but also on figuring out ways to fix them, underscoring the need for third-party assessments before and after deployment. It is a step towards a deeper commitment by AI developers to evaluating the safety of AI.

OpenAI, Google, and Microsoft, along with several smaller startups, will provide their models for testing, while Scale AI, another start-up, will build the testing platform. The companies hope the event will lead to more significant commitments to assess the safety of the AI systems being built.

“This is a direct pipeline to give feedback to companies,” said Chowdhury. “It’s not like we’re just doing this hackathon, and everybody’s going home. We’re going to be spending months after the exercise compiling a report, explaining common vulnerabilities, things that came up, patterns we saw.”

AI poses significant privacy and security challenges, and testing the limits of the technology through mass hacking is a step towards identifying and addressing them. While some users already try to expose flaws in chatbots informally, this event offers hackers a larger, more organized approach to testing AI technology.

<< photo by Possessed Photography >>
