Generative AI in Cybersecurity: Separating Hype from Reality

The increasing prevalence of generative AI in cybersecurity has been touted by many of the industry’s biggest players as the answer to all their problems. Microsoft’s Security Copilot, Google’s large language model, Recorded Future’s AI-assistant, IBM’s AI-powered security offering, and Veracode’s new machine learning tool all incorporate generative AI to defend networks and outmaneuver hackers. The hype around AI’s potential is certainly warranted, but many are uncertain about how this technology can be beneficially deployed in security contexts. This article examines generative AI’s potential, security risks, and what the future might hold.

The Potential of Generative AI in Cybersecurity

Generative AI tools are designed to replicate human speech and interact with users. The underlying machine learning technologies have already been deployed for spam filtering, anti-virus, and phishing-detection. Governments, investors, researchers, and cybersecurity executives alike believe that generative AI could bring a fundamental shift in human-computer interaction, making computers more intuitive to the way we naturally do things. For instance, natural language processing techniques could enable machines and humans to interact in new ways and uncover unpredictable benefits. During the RSA Conference, the hype around AI was palpable as everyone claimed that generative AI was the “Holy Grail” of cybersecurity.

The Reality of Generative AI in Cybersecurity

While the potential of generative AI in cybersecurity is significant, the reality is different. The technology requires careful evaluation, and its limitations should be taken into consideration. AI companies, one of the few sectors still attracting venture capital in a slowing economy, have taken advantage of the hype. However, security practitioners have expressed concern as some outfits are releasing products claiming to use generative AI without any significant improvement over systems already in place. Therefore, the biggest challenge facing those working in generative AI is separating sales tactics from what the technology can actually do.

Security Risks of Generative AI

Generative AI is vulnerable to data poisoning attacks or attacks on their underlying algorithms which could have far-reaching consequences. For instance, “prompt injection” attacks can manipulate the models in unforeseen ways. Furthermore, explainability is a crucial issue with generative AI. The underlying process is often invisible, leading to unjustifiable decisions based on incorrect output. The black-box nature of these advanced AI systems is unprecedented, making dealing with the technology challenging. Because of these challenges, operators of safety-critical systems are worried about the speed with which large language models are being deployed.

Editorial: Is Generative AI Worth the Risk?

Generative AI is a game-changing technology that can transform cybersecurity. However, we must make sure that this technology delivers on its promise. The hype around generative AI must be met with a cautious approach. Security practitioners must take a risk-aware approach, evaluate the potential of generative AI, and take actions to reduce the risks. The ultimate goal is to create a safer and more secure digital world.

Advice: Actions to Take Now

There are several steps that organizations should take to ensure that generative AI is deployed safely and successfully. Organizations should first evaluate the potential of generative AI and consider how it fits into their existing security architecture. Security teams should also take a risk-aware approach, recognizing the potential risks and understanding the limitations of generative AI.

Another crucial step is to invest in explainability and trust algorithms. If an organization cannot explain why an AI system made a particular decision, there is no way to verify its accuracy and justification. Furthermore, organizations must follow and adhere to local and global privacy regulations, ensuring personal data is protected.

Finally, organizations should work together with other stakeholders to exchange knowledge and experience, creating a safer and more secure cyber landscape. The risks of generative AI in cybersecurity are real, but if we work together and take the right steps, we can take advantage of this technology’s incredible potential.

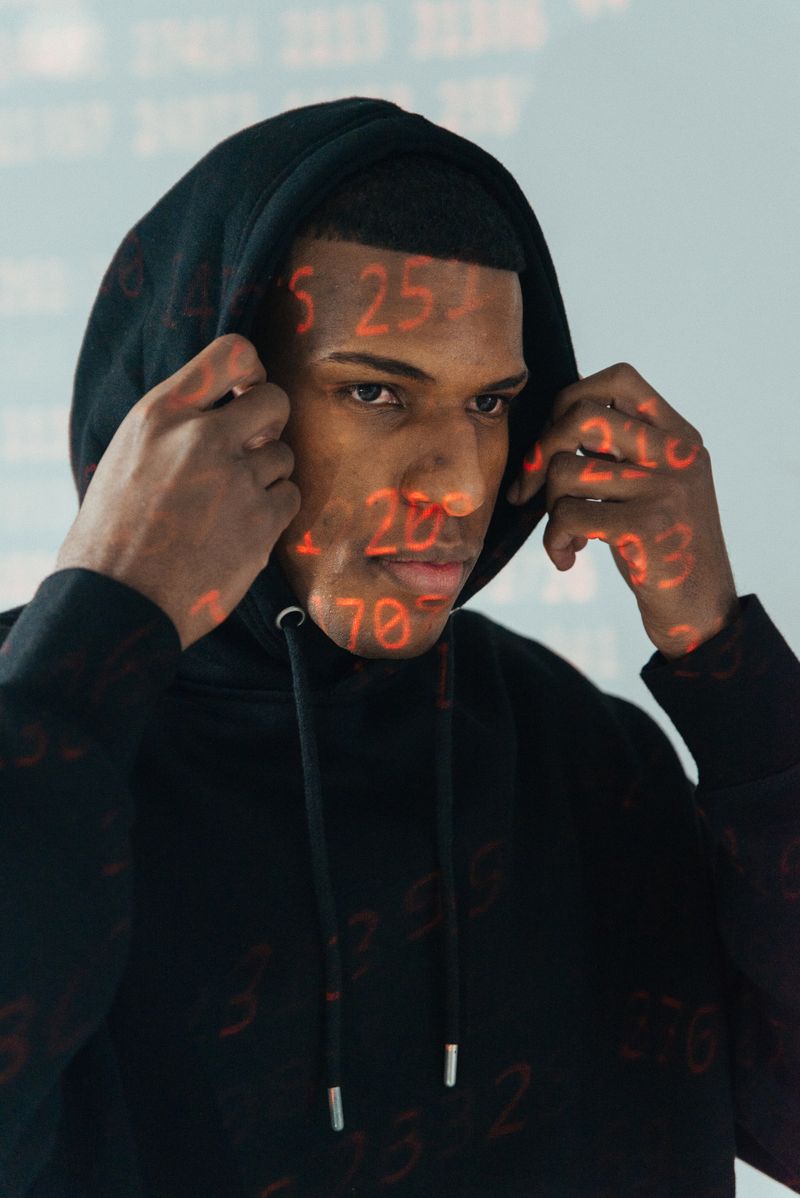

<< photo by Google DeepMind >>

You might want to read !

- The Limits of “Impossible Travel” Flags in BEC Attacks: Attackers Using Residential IP Addresses

- The Threat Posed by Iranian Hackers Using an Innovative Windows Kernel Driver.

- Why Google’s New Bug Bounty Program for Mobile Apps is a Game Changer

- “Balancing the Benefits and Risks: Exploring the Impact of Generative AI on User Empowerment and Security”

- The State of Cloud Security: Microsoft Azure VMs Among the Targets of Recent Cyberattack

- Microsoft Teams’ Security Features Under Scrutiny As Cyberattacks Increase

- “Insights from RSAC Innovation Sandbox Judge: Exploring the Evolution of Cybersecurity Innovation”

- Why Enterprises Should Take Steps to Adapt to the Shortening of TLS Certificate Validity

- Rheinmetall Continues Military Operations Unhindered Despite Ransomware Attack

- China’s Order to Stop Using Micron Chips Escalates Feud with US Tech Industry

- “Unpacking the North Korean Cyber Threat: Kimsuky Hackers Ramp Up with Advanced Reconnaissance Malware”

- “Strengthening Security in Software Development: Red Hat’s Latest Tool Offerings”