Geopolitics: Does the World Need an Arms Control Treaty for AI?

Introduction

At the dawn of the atomic age, scientists realized the need to control the weapons of mass destruction they had created. This realization marked the beginning of the arms control era. Today, a similar awakening is under way among researchers in the field of artificial intelligence (AI). As concern grows that AI could pose an extinction-level threat, so does discussion of an arms control treaty for AI. Organizations like the International Atomic Energy Agency (IAEA) offer a model for designing systems to control the proliferation of AI. Early efforts to control AI show promise, but significant challenges remain. This report examines the prospects and challenges of an AI arms control treaty and provides recommendations for moving forward.

The Arms Control Framework for AI

The key ingredients for building an advanced AI system are data, algorithms, and computing power. While data and algorithms are difficult to control, the supply of high-end graphics processing units (GPUs), which are essential for training cutting-edge language models, is dominated by a single company, Nvidia. This concentration presents an opportunity to use arms control concepts to limit the proliferation of the most powerful AI models. The United States has already taken steps to control the export of high-end GPUs to China, with the support of key manufacturers in the Netherlands, Japan, South Korea, and Taiwan. These measures suggest how ad hoc hardware controls could later be folded into an international body.

The Role of Carrots and Sticks

To build an international control regime for AI, it is important to consider both the governance of the technology and the benefits it can provide. By controlling the distribution of advanced AI chips and licensing the data centers used to train models, a regime could prevent the spread of the most advanced AI systems while still allowing countries to access capable models for peaceful use. Access could be provided through online apps or APIs, which permit beneficial use while keeping it open to monitoring. However, it remains unclear whether these benefits give countries enough economic incentive to participate in an AI arms control regime.

The Challenges of Prevention and Control

One of the challenges in building an international regime for AI is the lack of clarity about the catastrophic harms AI could pose. While there are concerns that AI could surpass human intelligence and threaten humanity, these risks remain theoretical and draw on the realm of science fiction. This lack of clarity makes it difficult to build the consensus necessary for an international non-proliferation regime. Policymakers are currently more focused on immediate risks, such as biased AI systems and the spread of misinformation. Furthermore, AI models are software, which is easy to copy and distribute, and this makes access controls hard to maintain.

The Future of AI Arms Control

The way AI models and their training methods are evolving poses challenges to the current approach of controlling AI through hardware. As algorithms become more efficient, the need for powerful data centers and high-end hardware may diminish. Decentralized training methods that use lagging-edge chips, together with the proliferation of open-source models, further erode control over AI models. Past efforts to control dangerous weapons also serve as a reminder of the limitations of export restrictions. Major powers are unlikely to limit the development of a technology they consider important for their security unless they have military substitutes for it.

Conclusion

While the idea of an AI arms control treaty has gained attention, there are significant challenges to overcome. The lack of clarity about the catastrophic harms AI could pose and the rapid evolution of AI models make it difficult to build consensus and maintain access controls. However, arms control regimes have evolved over time, and lessons can be learned from the nuclear era. As AI technology progresses, it is essential to keep discussing its potential risks and benefits and to explore innovative approaches to governance. Policymakers will need to balance attention to the immediate risks of AI with long-term initiatives to ensure global security and ethical AI development.

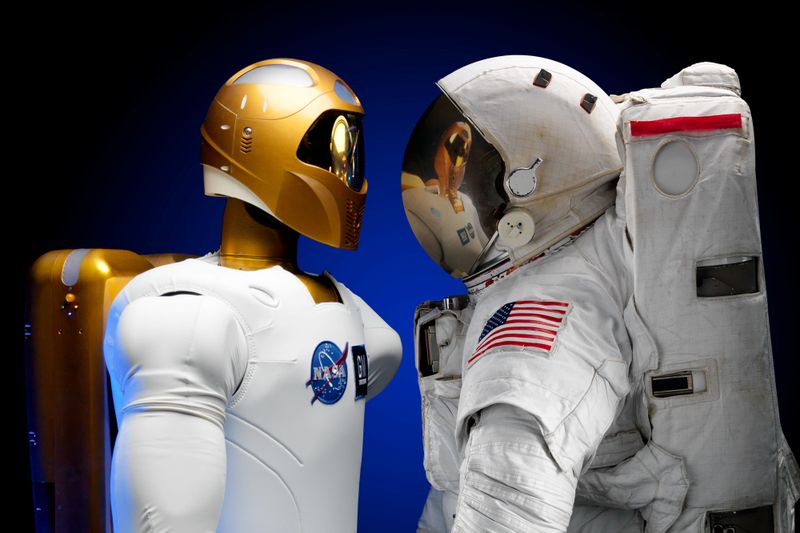

<< photo by Pixabay >>

The image is for illustrative purposes only and does not depict the actual situation.
